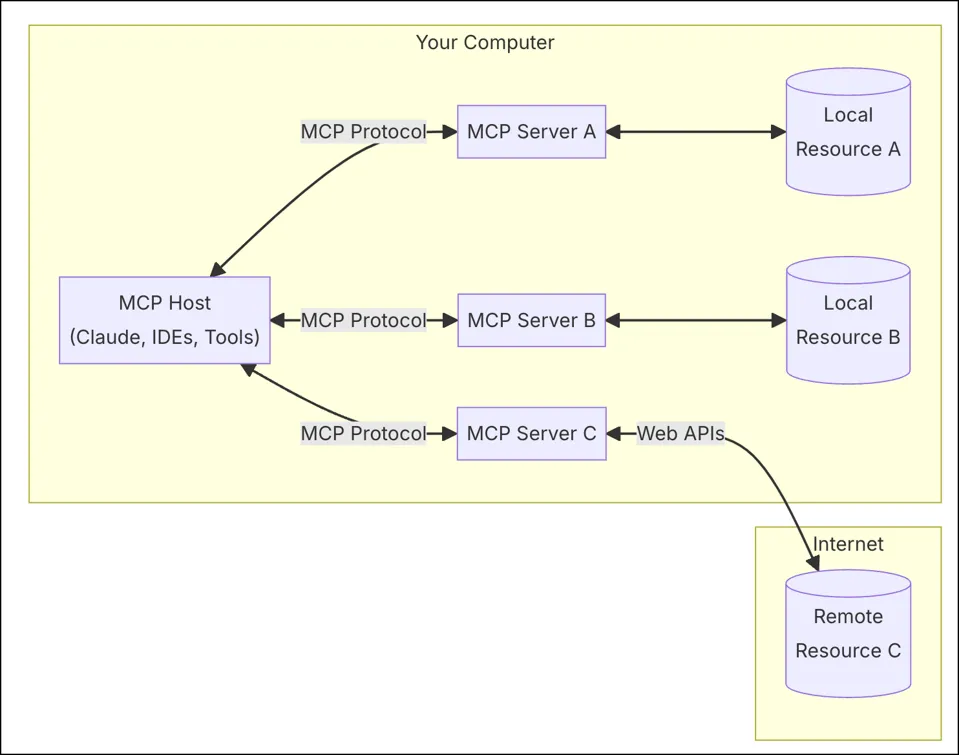

Understanding MCP (Model Context Protocol)

In today's fast-paced AI era, one of the most difficult tasks for developers is seamlessly connecting large language models (LLMs) to the data sources and tools required...

Read more →

I’m a researcher and data scientist with hands-on experience in computer vision, deep learning, and generative AI. My current work focuses on advancing agentic AI and multimodal large language models (LLMs). I hold a Master of Science in Data Science from the University of Massachusetts Dartmouth.

With over three years of industry experience in data science and machine learning, I’ve built scalable, real-world AI solutions, from working as an ML Engineer at Tiger Analytics to developing a conversational LLM agent during my Summer 2024 internship at Johnson & Johnson Innovative Medicine. I'm passionate about solving complex problems, pushing the boundaries of AI research, and turning emerging ideas into impactful solutions.

This is a collection of my hands-on projects in agentic AI, generative AI, LLMOps, computer vision, and MLOps. Each project dives into a key concept or framework in AI and ML, often supported by practical implementations or tutorials.

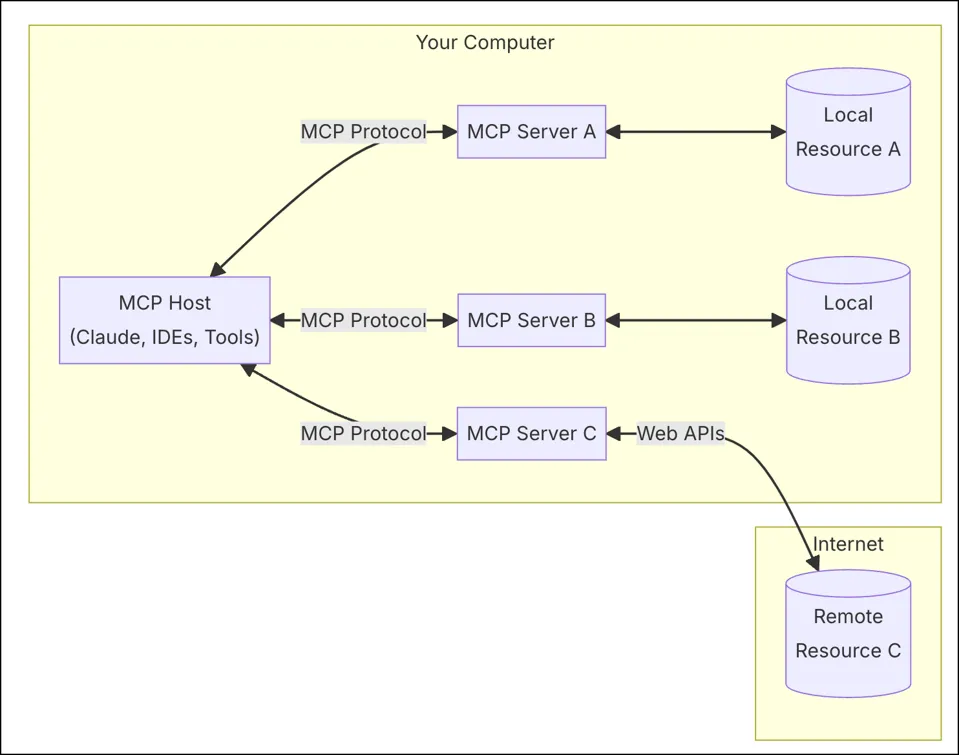

Large Language Models (LLMs) are initially trained on vast, diverse text corpora scraped from the internet. This pre-training phase teaches them statistical...

Read more →

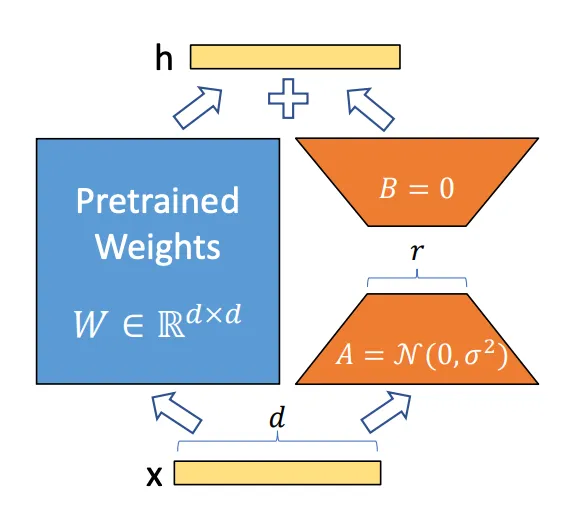

Contrastive Language-Image Pre-training (CLIP) was developed by OpenAI and first introduced in the paper “Learning Transferable Visual Models From Natural...

Read more →

Feel free to connect or explore more on my GitHub or LinkedIn.